By Valentina Shin, Adobe Research

Video has become a staple of our everyday lives. From traditional TV commercials to social media posts on YouTube, Snapchat, Vimeo or Facebook, millions of videos are produced and consumed each day. Not only businesses but, increasingly, individual creators are choosing video as their medium of communication. But creating good videos is hard, especially if you don’t have a whole production crew to help you. Our new research seeks to help individual video creators produce more engaging video content with less effort, using a novel interface and helpful recommendations.

“Vlogs,” or video blogs, are one example: informal, journalistic videos produced by “vloggers.” A vlog usually consists of a self-made recording of the vlogger talking about a topic of interest, such as a personal story, a how-to tutorial or product marketing. Vlogs are an increasingly popular genre on social media, with over 40 percent of internet users watching them on the web. The most popular vlog channels on YouTube have tens of millions of subscribers.

Vlogs mainly rely on two kinds of footage: A-roll and B-roll. A-roll is the main shot, usually a headshot of the vlogger talking about a topic of interest, for example, Spanish food; B-roll is the secondary “cutaway” footage that supports the main story with added visual interest and context, for example, shots of a restaurant or dishes of food. Incorporating good B-roll is key to producing engaging vlogs. B-roll is not unique to vlogs; it is a traditional technique long used in film and TV production. However, unlike professional productions with a dedicated B-roll crew, individual creators must rely on their own resources or on online video repositories to obtain B-roll.

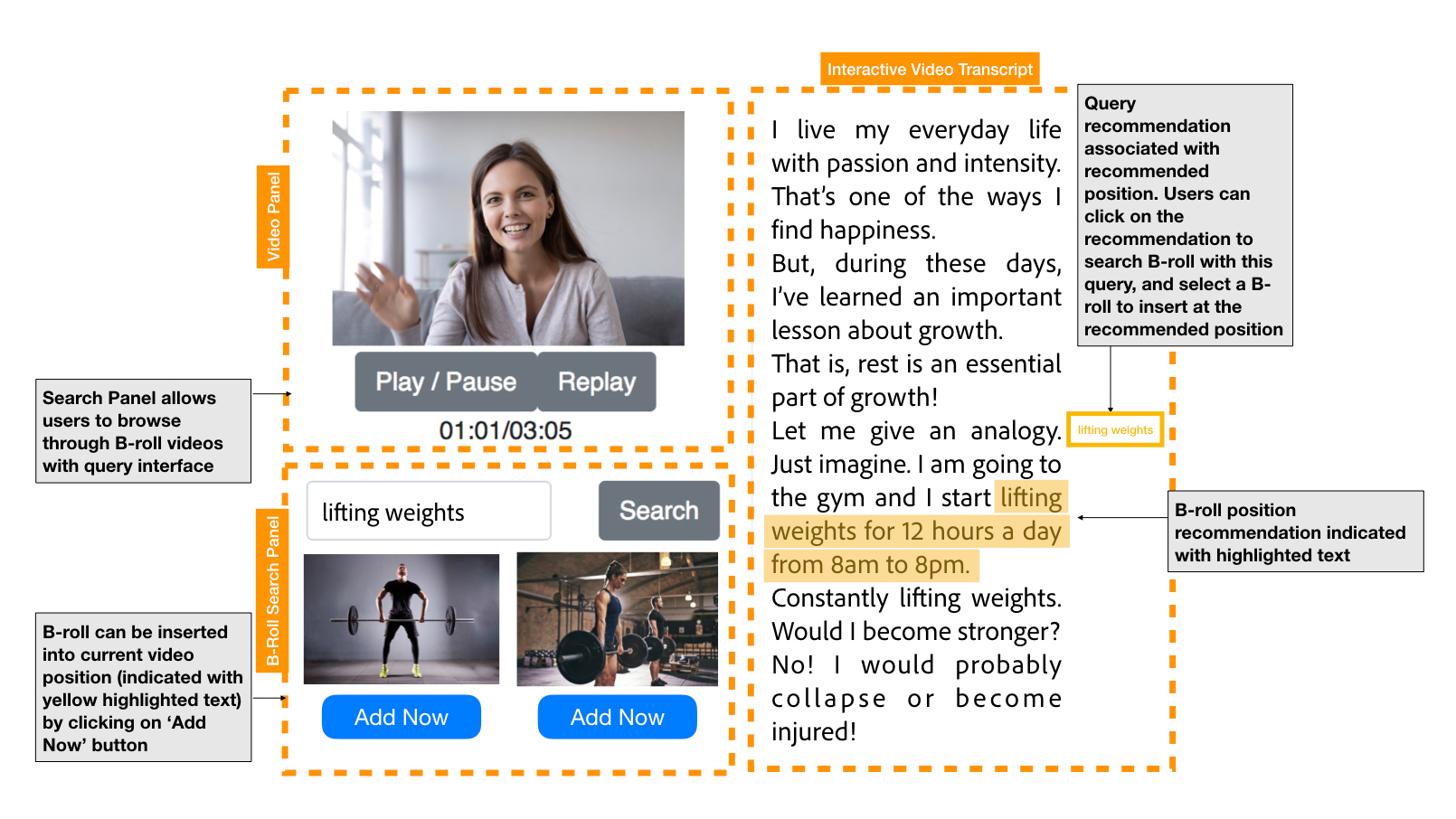

Figure 1. B-Script Interface. Users can navigate the video by clicking on a transcript, and can search for possible B-roll clips from external online repositories. Users can then add B-roll clips with the click of a button, and change their position and duration directly on the transcript. An automatic recommendation system identifies possible locations and search keywords in the video, and presents these to the user for easy edits.

In our paper, “B-Script: Transcript-based B-roll Editing with Recommendations,” which will appear at the Conference on Human Factors in Computing Systems (CHI) 2019, we present B-Script, a novel video editing tool that helps novice users search for B-roll and insert it into the main video. B-Script uses the transcript of the main video, obtained through speech recognition, which shows the vlog’s narration word for word. It analyzes the transcript and recommends good locations for B-roll, along with key phrases that users can use to search for B-roll content. For example, in Figure 1, the vlogger is talking about stress management. Our system highlights the phrase ‘lifting weights for 12 hours a day from 8am to 8pm’ and recommends the key phrase ‘lifting weights.’

How does B-Script find good places and content for B-roll? First of all, what do we even mean by good? To find out where experienced video editors insert B-roll, and what kind, we asked 115 expert editors to create vlogs by inserting B-roll into existing talking-head videos. Interestingly, different editors chose similar locations for B-roll, and these locations coincided with places where certain keywords were mentioned in the narration. The subjects of the inserted B-roll were also closely tied to these keywords. For example, in a video about economics, most editors placed B-roll depicting ‘money’ when the keyword ‘money’ was first mentioned.
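One way to make “different editors chose similar locations” concrete is a per-word agreement score. The sketch below is a hypothetical illustration, not the procedure from the paper: it assumes each editor’s B-roll placements are recorded as half-open ranges of transcript word indices, counts how many editors covered each word, and labels a word as a B-roll location when that fraction reaches an arbitrary threshold. The function name, data layout and threshold are all assumptions.

```python
def word_agreement(num_words, placements, threshold=0.5):
    """Score each transcript word by the fraction of editors whose B-roll covers it.

    placements: one list per editor of (start, end) word-index ranges, end exclusive
    (an assumed data layout). Returns (scores, labels), where labels[i] is True
    when scores[i] >= threshold.
    """
    num_editors = len(placements)
    coverage = [0] * num_words
    for editor_spans in placements:
        covered = set()  # a set, so overlapping spans from one editor count once
        for start, end in editor_spans:
            covered.update(range(max(start, 0), min(end, num_words)))
        for i in covered:
            coverage[i] += 1
    if num_editors:
        scores = [c / num_editors for c in coverage]
    else:
        scores = [0.0] * num_words
    labels = [s >= threshold for s in scores]
    return scores, labels


# Toy example: three hypothetical editors annotating an 8-word transcript.
words = "we talk about money and how money matters".split()
placements = [
    [(3, 4)],  # editor A: B-roll over "money"
    [(3, 5)],  # editor B: B-roll over "money and"
    [(6, 7)],  # editor C: B-roll over the second "money"
]
scores, labels = word_agreement(len(words), placements)
print([w for w, keep in zip(words, labels) if keep])  # ['money']
```

Labels produced this way could, under these assumptions, serve as per-word training targets for a keyword classifier.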

To predict B-roll-worthy keywords for a vlog, we used the data collected in this experiment to train a classifier. The classifier takes the vlog’s narration as input and predicts, for each word in the transcript, whether it is a keyword for B-roll. It takes into account features such as the word’s part of speech (e.g., noun, verb, adjective), whether the word occurs for the first time in the narration, and the word’s sentiment. B-Script then presents the selected keywords or key phrases as B-roll subjects and highlights the sections of the narration where the corresponding B-roll can be inserted. Users can click on the highlighted transcript text or the recommended keywords to search for B-roll videos in online repositories.
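The per-word formulation can be sketched as follows. This is a minimal illustration under loud assumptions: the tiny `POS_LEXICON` and `SENTIMENT_LEXICON` tables are hypothetical stand-ins for a real part-of-speech tagger and sentiment lexicon, and a plain logistic regression trained with stochastic gradient descent stands in for whatever model the authors actually used. Each word gets a feature vector built from the three cues named above.

```python
import math

# Hypothetical stand-ins for a real POS tagger and sentiment lexicon.
POS_LEXICON = {"money": "NOUN", "food": "NOUN", "weights": "NOUN",
               "lifting": "VERB", "spend": "VERB", "spicy": "ADJ"}
SENTIMENT_LEXICON = {"great": 0.8, "stress": -0.6}


def word_features(words):
    """Return one feature vector per transcript word:
    [is_noun, is_verb, is_adj, first_occurrence, sentiment]."""
    seen = set()
    features = []
    for token in words:
        token = token.lower()
        pos = POS_LEXICON.get(token, "OTHER")
        first = token not in seen
        seen.add(token)
        features.append([
            float(pos == "NOUN"),
            float(pos == "VERB"),
            float(pos == "ADJ"),
            float(first),
            SENTIMENT_LEXICON.get(token, 0.0),
        ])
    return features


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=500):
    """Fit logistic-regression weights with plain stochastic gradient descent."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            w = [wj - lr * err * xj for wj, xj in zip(w, xi)]
            b -= lr * err
    return w, b


def predict_proba(w, b, X):
    """Probability that each word is a B-roll keyword."""
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) for xi in X]


# Toy training data: first mentions of "money" and "food" are labeled keywords.
words = "we spend money and then more money on food".split()
labels = [0, 0, 1, 0, 0, 0, 0, 0, 1]
X = word_features(words)
w, b = train_logistic(X, labels)
probs = predict_proba(w, b, X)
print([word for word, p in zip(words, probs) if p > 0.5])
```

In this toy setup, the first-occurrence feature is what lets the model separate the first “money” from its repeat, mirroring the editors’ tendency to place B-roll at a keyword’s first mention.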

In addition to B-roll recommendations, B-Script provides a convenient text-based interface for inserting and aligning B-roll with the main video through the transcript view. As shown in Figure 1, users can search for B-roll by clicking on a word in the transcript, then insert the clip and adjust its position and duration directly in the transcript view. This intuitive text-based interface lets even novice users quickly create engaging vlogs with B-roll.
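Transcript-based alignment is possible because speech recognition typically yields a start and end time for every word, so a B-roll clip anchored to a range of words maps directly onto the video timeline. The sketch below assumes such per-word timestamps in seconds; the `Word` and `BRollClip` classes, the `drag_end` helper, the clip identifier and the timestamps are hypothetical illustrations, not B-Script’s data model. Dragging a clip’s edge in the transcript then amounts to changing its word range.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds; assumed to come from speech recognition
    end: float


@dataclass
class BRollClip:
    source: str      # hypothetical identifier of a clip found in an online repository
    first_word: int  # index of the first transcript word the clip covers
    last_word: int   # index of the last covered word (inclusive)


def clip_interval(clip, words):
    """Map a clip's word range to (start_time, duration) on the main video timeline."""
    if not 0 <= clip.first_word <= clip.last_word < len(words):
        raise ValueError("clip word range is outside the transcript")
    start = words[clip.first_word].start
    return start, words[clip.last_word].end - start


def drag_end(clip, delta, num_words):
    """Move the clip's end handle by `delta` words, clamped to the transcript."""
    new_last = max(clip.first_word, min(clip.last_word + delta, num_words - 1))
    return BRollClip(clip.source, clip.first_word, new_last)


# Toy transcript with made-up timestamps.
words = [Word("lifting", 3.0, 3.5), Word("weights", 3.5, 4.25),
         Word("for", 4.25, 4.5), Word("12", 4.5, 4.75), Word("hours", 4.75, 5.0)]
clip = BRollClip("hypothetical-weights-clip", 0, 1)
print(clip_interval(clip, words))                           # (3.0, 1.25)
print(clip_interval(drag_end(clip, 3, len(words)), words))  # (3.0, 2.0)
```

In this sketch, clips are stored as word indices rather than raw times, so a B-roll clip stays attached to the narration it illustrates and its timing is derived only when the timeline is rendered.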

Finally, we show that users can create more engaging videos with less effort using our interface and recommendations. Both the video creators themselves and external raters rated the videos produced with our recommendations as better and more engaging than those produced without them.

In summary, we introduced a transcript-based recommendation system that supports B-roll video editing. The system allows novice users to easily create interesting vlogs by incorporating additional footage that supplements their stories. Our recommendation technique and text-based editing interface can also be applied to other types of video production, such as educational videos, to create more informative content for viewers.

For more details about our approach and to view the system demo, please see our project webpage and paper.

Contributors:

Valentina Shin, Bryan Russell, Oliver Wang, Gautham Mysore, Adobe Research

Bernd Huber, Harvard University