By Meredith Alexander Kunz, Adobe Research

Computer vision, driven by the intelligence of deep neural networks, has made tremendous progress in identifying everyday objects. But new research by Adobe and Auburn University shows just how easily these AI networks can be fooled by the same objects—seen from different perspectives and angles.

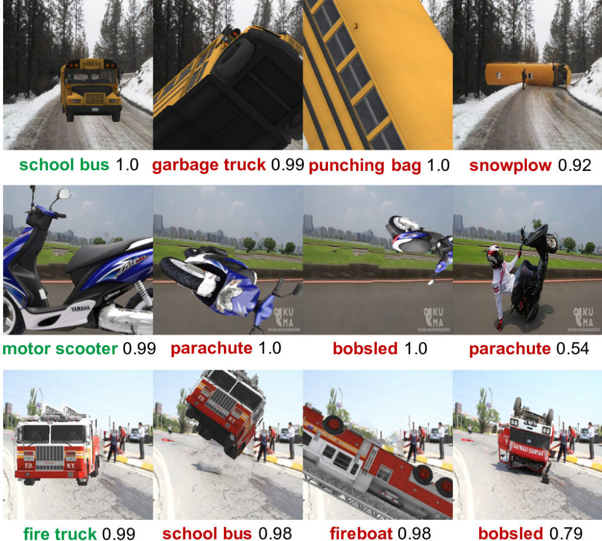

Long Mai, research scientist, worked with collaborators to uncover neural networks’ failures in figuring out well-known objects such as school buses, firetrucks, and motor scooters. When these items were shown at varying angles, such as turned from the side or pointing upwards—the kinds of perspectives that might be seen from a self-driving car hurtling towards an oncoming accident—the networks “saw” a bobsled, a punching bag, or a parachute instead of the correct vehicles.

“We’re working to understand the fundamental limitations of existing neural networks, and how to improve them,” Mai explains.

The researchers tested deep neural networks by using 3D models to create these unusual poses. They created “adversarial” examples of the imagery of the vehicles, in addition to stop signs, dogs, benches, and other roadside items, to challenge the system. The team focused on testing a state-of-the-art image recognition AI, the Google Inception v.3 network.

The extent of the system’s inaccuracy was surprising. The network incorrectly classified objects in 97 percent of their poses overall (these same objects were normally recognized in a typical straight-on pose).

“The median percent of correct classifications for all 30 objects was only 3.09 percent,” the group wrote in their paper, Strike (with) a Pose: Neural Networks Are Easily Fooled by Strange Poses of Familiar Objects, appearing at the June 2019 CVPR conference.

Other AI systems focused on object recognition did not perform that much better, authors found. When the adversarial system faced the Yolo v.3 object recognition system, 75.5 percent of the images that tricked Inception also fooled Yolo.

Paper authors concluded that these AI systems have a long way to go to truly “recognizing” what they are seeing. “In sum, our work shows that state-of-the-art DNNs perform image classification well but are still far from true object recognition,” they write.

This work shows the pitfalls of real-world uses for AI and neural networks. Despite their great performance on stationary test sets, these unusual kinds of object poses reflect a reality that these systems will need to reckon with for applications such as self-driving vehicles.

One promising path forward in improving these systems is to incorporate more training data and images that reflect the point of view of cars and trucks—the kinds of machines that may need to use this type of AI—rather than training images taken by humans with a human aesthetic. Adding many more adversarial, 3D-modeled examples could help, though creating such a database would be “costly and labor-intensive,” authors write.

Mai and his collaborators plan to continue to study how to reduce these kinds of deep neural network errors, working to build a better AI.

Contributors:

Long Mai, Adobe Research

Michael Alcorn, Qi Li, Zhitao Gong, Chengfei Wang, Long Mai, Wei-shinn Ku, Anh Nguyen, Auburn University

For more info: