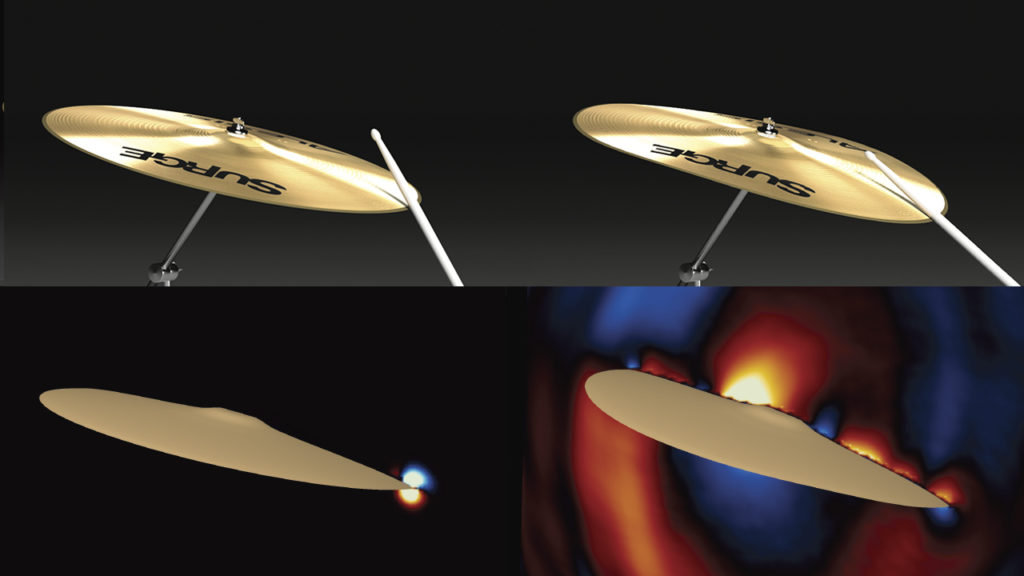

Scientists at Adobe Research and Stanford University have developed a system that synthesizes accurate sounds for a range of computer-generated animations, from water splashing to cymbals clashing.

The researchers, including Adobe Research’s Timothy Langlois, presented their sound synthesis work at August’s SIGGRAPH 2018, a leading conference on computer graphics and interactive techniques.

In addition to potentially creating realistic soundtracks for movies and virtual reality, this system could also help engineers improve their prototypes for noise-making products before they are physically produced. (For example, designers could check whether products will make irritating or loud sounds.)

“I’ve spent years trying to solve partial differential equations – which govern how sound propagates – by hand,” the paper’s lead author Jui-Hsien Wang, an Adobe Research intern and Stanford student, told Stanford News Service. “This is actually a place where you don’t just solve the equation, but you can actually hear it once you’ve done it. That’s really exciting to me, and it’s fun.”

The system relies on calculations of geometry and physical motion to determine the vibrations of objects, and how these vibrations create sound waves.
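To give a feel for what "vibrations creating sound waves" means computationally, here is a minimal sketch of our own, not the researchers' method. It assumes a one-dimensional tube, a vibrating surface at one end, and a basic finite-difference scheme for the wave equation; the function name `simulate_pressure` and all of its parameters are hypothetical and chosen only for illustration. The system described above works with full three-dimensional geometry and is far more sophisticated.

```python
import math

def simulate_pressure(n_cells=200, n_steps=600, courant=0.5,
                      source_period=40, listener=150):
    """Toy 1D wave equation solve (p_tt = c^2 p_xx) with a leapfrog scheme.

    Illustrative assumption only: a vibrating surface sits at cell 0 and a
    listener at cell `listener`. `courant` is c*dt/dx and must be <= 1 for
    the scheme to stay stable.
    """
    prev = [0.0] * n_cells   # pressure at the previous time step
    curr = [0.0] * n_cells   # pressure at the current time step
    heard = []               # pressure samples recorded at the listener
    c2 = courant ** 2
    for step in range(n_steps):
        nxt = [0.0] * n_cells
        # Interior cells: standard second-order update in time and space.
        for i in range(1, n_cells - 1):
            nxt[i] = (2.0 * curr[i] - prev[i]
                      + c2 * (curr[i + 1] - 2.0 * curr[i] + curr[i - 1]))
        # Left boundary: the vibrating surface drives the pressure field.
        nxt[0] = math.sin(2.0 * math.pi * (step + 1) / source_period)
        # Right boundary stays at zero (a simple fixed end).
        prev, curr = curr, nxt
        heard.append(curr[listener])
    return heard

if __name__ == "__main__":
    samples = simulate_pressure()
    first_sound = next(i for i, s in enumerate(samples) if s != 0.0)
    print("first nonzero sample at step", first_sound)
```

The listener hears nothing until the wave has had time to travel from the vibrating surface, which is the kind of physically grounded timing that pre-recorded clips have to be synchronized to by hand.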

This approach could save a lot of time for engineers and animators. Today, most animation sounds originate in pre-recorded clips, which then require manual effort to synchronize with what appears on screen. Earlier systems that predict sounds as accurately as this one work only in special cases or do not account for geometry in the same way. They also require lengthy pre-computations for each object.

In contrast, the new work is “essentially a render button with minimal pre-processing,” says Ante Qu, another co-author. Qu is a Stanford student and Adobe Research intern. Stanford’s Doug James, a computer scientist, collaborated on the project.

In its current form, the group’s process is slow to produce a finished result. The team plans to improve performance and speed by using parallel hardware and making other upgrades.

Contributors:

Timothy Langlois, Adobe Research

Jui-Hsien Wang and Ante Qu, Adobe Research interns and Stanford University students

Doug James, Stanford University

By Meredith Alexander Kunz, Adobe Research

Story based on reporting by Stanford News Service

Image credit: Timothy Langlois, Doug L. James, Ante Qu, and Jui-Hsien Wang