By Meredith Alexander Kunz, Adobe Research

Computer vision research is moving at light speed. Computer scientists are now creating systems that can make the kinds of judgments about images that humans are very good at, but that have proved challenging for machines.

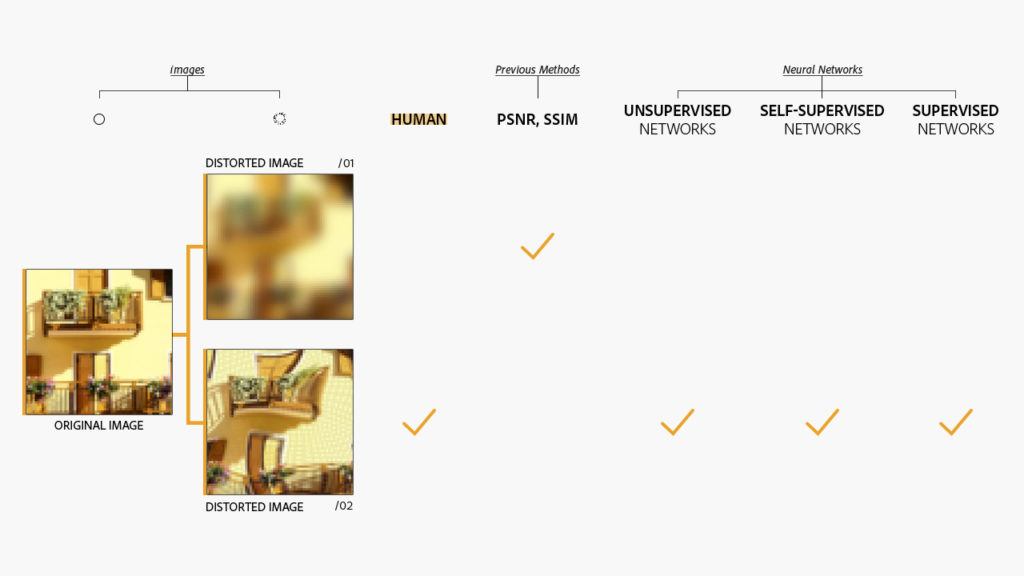

However, machines “see” images differently from humans, and common measures for image similarity do not correspond well with human perception.

Scientists from Adobe Research wanted to try a new approach. “The question we are asking is, can deep learning distinguish subtle differences between images more like humans do?” says Eli Shechtman, principal scientist, who worked with Oliver Wang, senior research scientist, and University of California, Berkeley, scholars on the research. (First author Richard Zhang, formerly of UC Berkeley, has since joined Adobe Research.) Their paper, “The Unreasonable Effectiveness of Deep Features as a Perceptual Metric,” will be presented at CVPR 2018 in June.

Deep Learning Outperforms Previous Approaches

The team found that deep learning networks, a form of artificial intelligence, can “see” images in a more human-like way than previous methods. Interestingly, this is true even for networks trained for unrelated tasks, such as classifying images. These systems pick the image that people judge most similar more accurately than other tools do, including tools that have been lauded by the entertainment industry.
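
To make the idea concrete, here is a minimal sketch of how a network's internal activations can be turned into an image distance. It is not the researchers' implementation. It assumes a toy, hypothetical feature extractor standing in for a real trained network: two layers of fixed random 3x3 filters with ReLU. At each location, the sketch unit-normalizes the vector of channel activations. It then averages the squared differences over space and sums the result across layers with equal weights, which is also an assumption.

```python
import numpy as np


def conv3x3_relu(x, weights):
    """3x3 convolution ('same' size via edge padding) followed by ReLU.

    x: array of shape (C_in, H, W); weights: array of shape (C_out, C_in, 3, 3).
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)), mode="edge")
    out = np.zeros((weights.shape[0], h, w))
    for dy in range(3):
        for dx in range(3):
            patch = padded[:, dy:dy + h, dx:dx + w]  # shifted (C_in, H, W) window
            # Mix input channels into output channels for this filter tap.
            out += np.einsum("oc,chw->ohw", weights[:, :, dy, dx], patch)
    return np.maximum(out, 0.0)


def make_toy_network(channels=(3, 8, 16), seed=0):
    """Hypothetical stand-in for a trained network: fixed random filters per layer."""
    rng = np.random.default_rng(seed)
    return [rng.standard_normal((c_out, c_in, 3, 3)) / np.sqrt(9 * c_in)
            for c_in, c_out in zip(channels[:-1], channels[1:])]


def extract_features(image, network):
    """Return every layer's activation map, downsampling 2x between layers."""
    features, x = [], image
    for weights in network:
        x = conv3x3_relu(x, weights)
        features.append(x)
        x = x[:, ::2, ::2]
    return features


def deep_feature_distance(img_a, img_b, network, eps=1e-10):
    """Sum over layers (equal weights, an assumption) of normalized feature differences."""
    total = 0.0
    for fa, fb in zip(extract_features(img_a, network), extract_features(img_b, network)):
        # Unit-normalize the channel vector at each spatial location.
        fa = fa / (np.linalg.norm(fa, axis=0, keepdims=True) + eps)
        fb = fb / (np.linalg.norm(fb, axis=0, keepdims=True) + eps)
        # Squared distance per location, averaged over the spatial grid.
        total += float(np.mean(np.sum((fa - fb) ** 2, axis=0)))
    return total


rng = np.random.default_rng(1)
net = make_toy_network()
image = rng.random((3, 32, 32))
noisy = np.clip(image + 0.1 * rng.standard_normal(image.shape), 0.0, 1.0)
print(deep_feature_distance(image, image, net))  # 0.0 for identical inputs
print(deep_feature_distance(image, noisy, net))
```

With a real network, the equal per-layer weights could be replaced by weights tuned to agree with human judgments. That calibration step is left out here.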

To estimate the perceived quality of an image or video, the movie industry now uses several tools, including the structural similarity (SSIM) algorithm. This method represented a major improvement over more traditional approaches such as peak signal-to-noise ratio (PSNR).
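
For reference, PSNR is a simple function of the mean squared pixel error. SSIM instead compares the means, variances, and covariance of two images, which correspond to luminance, contrast, and structure. The sketch below assumes grayscale images with values in [0, 1]. It computes PSNR and a simplified SSIM over a single window that spans the whole image. Standard SSIM slides a small window, typically Gaussian-weighted, across the image and averages the local scores.

```python
import numpy as np


def psnr(reference, test, max_value=1.0):
    """Peak signal-to-noise ratio in decibels; higher means closer to the reference."""
    mse = np.mean((reference - test) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


def global_ssim(x, y, max_value=1.0):
    """Simplified SSIM over one window covering the whole image.

    Standard SSIM averages this same formula over many small local windows.
    """
    c1 = (0.01 * max_value) ** 2  # stabilizes the luminance term
    c2 = (0.03 * max_value) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


rng = np.random.default_rng(0)
image = rng.random((64, 64))  # assumed grayscale image in [0, 1]
noisy = np.clip(image + 0.05 * rng.standard_normal(image.shape), 0.0, 1.0)
print(psnr(image, noisy), global_ssim(image, noisy))
```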

SSIM received numerous awards for that contribution, including an Emmy. It is not perfect, however, and now the Adobe Research team and its collaborators are achieving better results.

“Simple neural networks already perform much better than SSIM and mimic better the way people see,” says Shechtman. That is even true for neural networks that are “self-supervised”—ones that are not given labels and tags to help them understand specific things in advance, but learn on their own by performing a proxy task, such as predicting the color of grayscale images. (The paper’s title is a nod to a celebrated 1960 scholarly article, “The Unreasonable Effectiveness of Mathematics in the Natural Sciences,” which argued that mathematical concepts have applicability far beyond the context in which they were originally developed—much like neural networks in this case.)
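
The colorization proxy task needs no human labels because the training target comes from the image itself. The sketch below shows how one training pair could be built from an unlabeled RGB image. It assumes BT.601 luma weights for the grayscale conversion and raw RGB values as the target. Real colorization systems often work in other color spaces, such as Lab.

```python
import numpy as np

# ITU-R BT.601 luma weights for R, G, B (an assumed choice of grayscale conversion).
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def make_colorization_pair(rgb):
    """Turn one unlabeled RGB image (H, W, 3) into a self-supervised training pair.

    Input: the grayscale version (H, W, 1). Target: the original colors (H, W, 3).
    The "label" is simply the part of the image the model is not shown.
    """
    gray = rgb @ LUMA_WEIGHTS  # weighted sum over the color axis -> (H, W)
    return gray[..., np.newaxis], rgb


rng = np.random.default_rng(0)
unlabeled_images = [rng.random((32, 32, 3)) for _ in range(4)]  # hypothetical data
pairs = [make_colorization_pair(img) for img in unlabeled_images]
model_input, target = pairs[0]
print(model_input.shape, target.shape)  # (32, 32, 1) (32, 32, 3)
```

Because every unlabeled photo yields a training pair this way, the amount of usable data is limited only by how many images are available.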

Hard-to-Fool Networks Yield Human-Like Responses

Previous methods, for example, often prefer images that have the correct colors but are blurry. This, in turn, leads to blurry results in image generation tasks. By using a perceptual similarity metric, researchers can teach deep learning networks to pay more attention to things people care about, such as blur, and less to things that are harder to notice, such as subtle image shifts.
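
A small experiment shows why pixel-wise error measures can favor blur. The sketch below uses a random-noise texture as a stand-in for fine image detail and mean squared error as the pixel-wise measure; both are assumptions. It compares a 3x3 box-blurred copy of the texture with a copy shifted by one pixel. To a person, the shifted texture looks essentially unchanged, while the blurred one visibly loses detail. Even so, the shift produces about twice the squared error of the blur.

```python
import numpy as np


def mse(a, b):
    """Mean squared pixel error, a measure that tends to reward blur."""
    return float(np.mean((a - b) ** 2))


def box_blur(img):
    """3x3 box blur with wrap-around borders, so every pixel is treated the same."""
    acc = np.zeros_like(img)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            acc += np.roll(img, (dy, dx), axis=(0, 1))
    return acc / 9.0


rng = np.random.default_rng(0)
original = rng.random((128, 128))       # hypothetical fine-grained texture
blurred = box_blur(original)            # visibly loses detail
shifted = np.roll(original, 1, axis=1)  # same texture, moved one pixel

print("MSE vs blurred:", mse(original, blurred))
print("MSE vs shifted:", mse(original, shifted))
```

A perceptual metric is meant to reverse this ranking. That is why using one as a training objective can steer image generators away from blurry outputs.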

The use of self-supervised networks shows just how “smart” these systems can be, and it opens up new possibilities for future work. “With a self-supervised system that doesn’t need to start with labels, you could theoretically train it on infinite data, and we expect the quality of most deep learning methods to improve with more data,” says Wang.

The research team has also made an important contribution to advancing machine learning in computer vision. They created a large new dataset to support this work, one that contains 160,000 examples from 425 distortion types, and they are releasing it to others. This gives researchers the chance to develop better perceptual metrics in the future.
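
A dataset of human judgments like this one makes it possible to score any candidate metric. The sketch below assumes a hypothetical format in which each example records a reference image, two distorted versions, and the fraction of people who judged the first version closer to the reference. Under that assumption, a metric can be credited with the share of people who agreed with its choice, and ties earn half credit. This is one simple scoring scheme, not necessarily the one the researchers use.

```python
import numpy as np


def agreement_score(metric, triplets):
    """Average human agreement with a distance metric's choices.

    metric(ref, img) returns a distance (smaller = more similar).
    Each triplet uses a hypothetical format:
    (reference, candidate_a, candidate_b, fraction_of_humans_who_chose_a).
    """
    credits = []
    for ref, cand_a, cand_b, frac_a in triplets:
        dist_a, dist_b = metric(ref, cand_a), metric(ref, cand_b)
        if dist_a < dist_b:
            credits.append(frac_a)          # metric picked A
        elif dist_b < dist_a:
            credits.append(1.0 - frac_a)    # metric picked B
        else:
            credits.append(0.5)             # tie: split the credit
    return float(np.mean(credits))


def mse(a, b):
    return float(np.mean((a - b) ** 2))


ref = np.zeros((8, 8))
toy_triplets = [
    (ref, ref + 0.1, ref + 0.5, 0.8),  # 80% of (hypothetical) raters chose A
    (ref, ref + 0.1, ref + 0.5, 0.3),  # only 30% chose A here
]
print(round(agreement_score(mse, toy_triplets), 2))  # 0.55
```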

Does this mean computers are getting even closer to being able to see things as humans do? In a narrow way, yes, though there are still critical problems to be solved, the researchers say. In any case, the work highlights the connection between the human brain’s visual system and neural network-driven computer vision.

“There is new research that suggests that the progressive layering of neural networks may share similarities with visual representations in our brains,” says Wang.

Which image seems closer to the original? Neural networks select the same one that humans do. Previous image-similarity methods pick the blurrier image.

Contributors:

Richard Zhang, Eli Shechtman, Oliver Wang (Adobe Research)

Phillip Isola, Alexei A. Efros (University of California, Berkeley)

Illustrations by Qi Zhou