By Senthil Purushwalkam (CMU), Abhinav Gupta (CMU), Danny Kaufman (Adobe Research), Bryan Russell (Adobe Research)

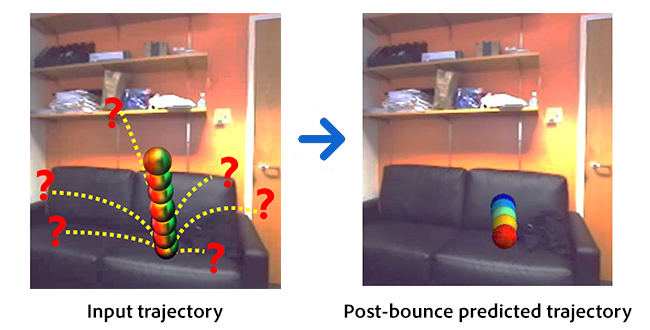

Think about the last time you tossed a ball. If you were in a room full of furniture, you likely thought about what would happen next. In the scene pictured below, you’d guess that the ball would bounce off the sofa and continue forward, not veer left or right or fly high into the air. You would also infer that the sofa is soft and would slow the ball down.

We know how to reason in this way because of our personal history of interacting with our everyday environments, where we watch the outcome of our interactions. Now, we would like to teach computers to learn to make predictions in a similar fashion about everyday real-world interactions.

The ability to make such predictions could enable applications in many areas. For example, imagine an augmented reality application where you could throw virtual objects in your current environment, and the objects’ actions would obey the physics of the underlying scene. Such an application could also enable artists to create new experiences where users could more realistically interact with their environments in augmented reality.

In our work, “Bounce and Learn: Modeling Scene Dynamics with Real-World Bounces,” a collaboration with Carnegie Mellon University (CMU) that will appear at the International Conference on Learning Representations (ICLR) 2019, we show how to train a neural network to predict an object’s trajectory after it bounces off a surface, given the trajectory observed before contact.

Figure 1: Our approach learns to predict the trajectory of a ball after it bounces off a real-world surface.

Previous approaches have worked only in small-scale settings, such as closed laboratory environments, or in simulation. We teach the computer about real-world interactions in a unique and playful manner—by showing it numerous real-world examples of a foam ball being thrown around in many different environments.

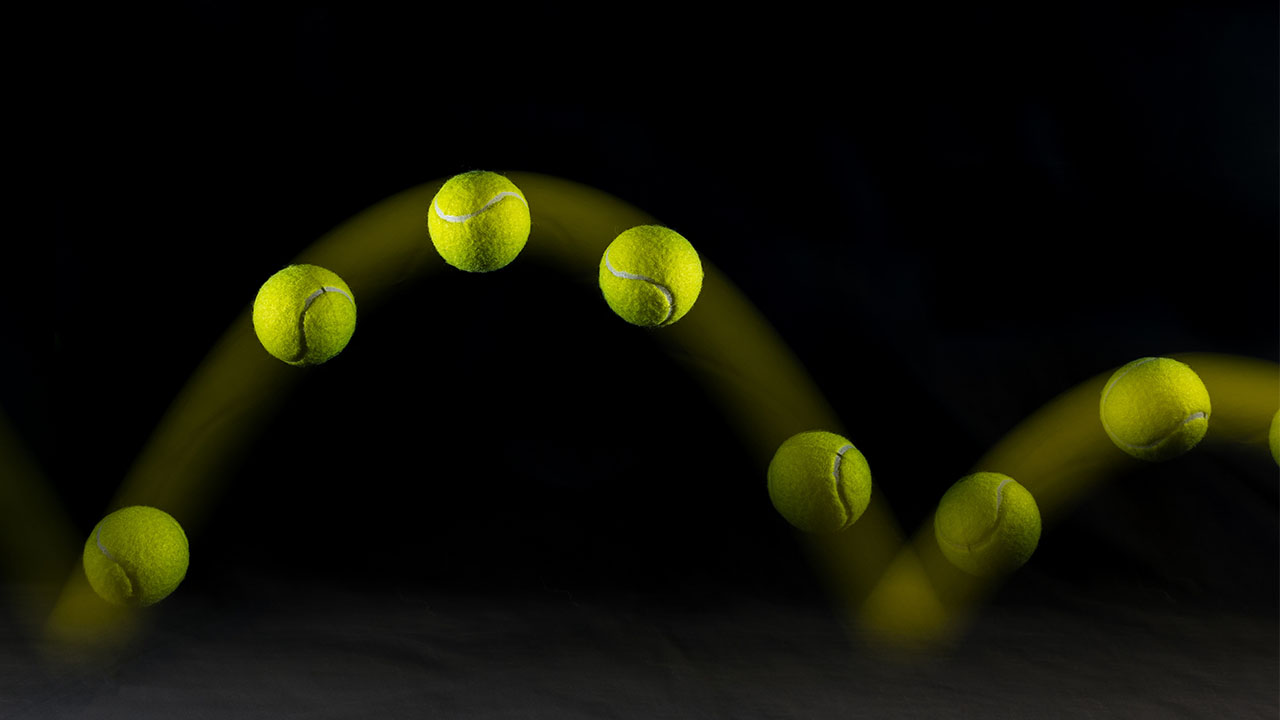

Figure 2: Example ball bounce interactions. We seek to have a computer learn from watching these interactions.

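Moreover, we show how the computer can infer, from an observed input trajectory, two underlying physical properties that govern bounces: restitution (how much of the ball’s speed along the surface normal is retained after impact) and the collision normal (the direction perpendicular to the surface at the point of contact).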

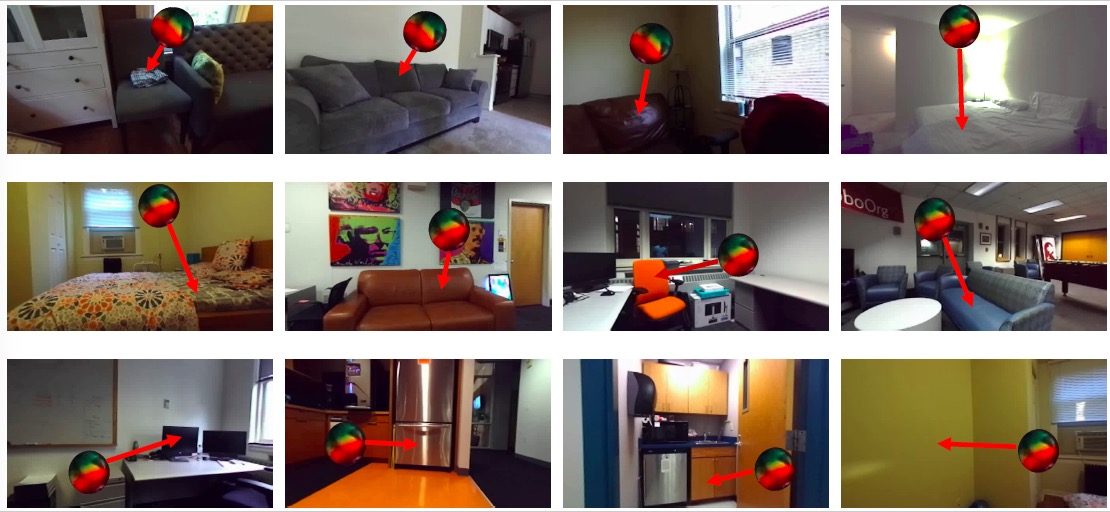

Below, we show the new dataset of real-world bounces that we collected and used to train our neural network. It is the largest of its kind and spans a variety of everyday scenes and surfaces with varying geometry.

Figure 3: We have collected a large dataset of real-world bounces, which we use to train our system.

Using our collected dataset of bounce interactions, we trained a novel neural network for our forward-prediction task. Our neural network consists of two parts. The visual inference module estimates the restitution and collision normal from the visual information of the input scene. The physics inference module takes those estimates together with the input trajectory and predicts the trajectory after the bounce.
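To make the roles of restitution and the collision normal concrete, here is a minimal sketch of the textbook frictionless bounce model, in which the velocity component along the normal is reversed and scaled by the restitution while the tangential component is left unchanged. This is not our trained physics inference module, which is learned from data. The function names, the z-up coordinate frame, and the SI units are assumptions made for illustration only.

```python
import math

# Assumption: z axis points up, units are meters and seconds.
GRAVITY = (0.0, 0.0, -9.81)


def dot(a, b):
    """Dot product of two 3-vectors given as sequences of floats."""
    return sum(x * y for x, y in zip(a, b))


def bounce_velocity(v_in, normal, restitution):
    """Post-bounce velocity under the idealized frictionless model.

    v_out = v_in - (1 + e) * (v_in . n) * n, where n is the unit
    collision normal and e is the coefficient of restitution.
    """
    length = math.sqrt(dot(normal, normal))
    n = [c / length for c in normal]  # normalize the collision normal
    v_n = dot(v_in, n)                # signed speed along the normal
    return [v - (1.0 + restitution) * v_n * c for v, c in zip(v_in, n)]


def predict_post_bounce(contact_point, v_in, normal, restitution, times):
    """Ballistic positions after the bounce at the given times (seconds).

    Hypothetical helper: ignores air drag, spin, and surface deformation.
    """
    v_out = bounce_velocity(v_in, normal, restitution)
    return [
        [p + v * t + 0.5 * g * t * t
         for p, v, g in zip(contact_point, v_out, GRAVITY)]
        for t in times
    ]
```

For example, a ball hitting a flat floor (normal pointing up) with velocity `[1.0, 0.0, -2.0]` and restitution `0.5` keeps its horizontal speed of 1 m/s and leaves the floor at 1 m/s upward. A softer surface such as a sofa would correspond to a lower restitution value in this model.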

Figure 4: Our forward prediction results. Notice how we successfully predict forward trajectories over a variety of different real-world surfaces.

Figure 4 shows forward predictions from our model, which succeed across a variety of surface interactions.

While restitution and collision normals are important for characterizing real-world bounces, they offer only a first-order approximation of bounce interactions. Real-world bounces are complex, and additional factors can significantly influence the outcome, such as surface deformation or whether the surface is “stochastic,” meaning the post-bounce trajectory may be randomly distributed (e.g., a rocky surface). Learning to reason about such interactions is left to future work.

In summary, we have introduced a new approach to predicting the forward trajectories of real-world ball bounces in everyday scenes. Our work is a step toward understanding real-world physical interactions. It could benefit augmented reality applications, as well as robotics, where an agent needs to interact physically with everyday environments.

For more details of our approach and to view more results, please see our project webpage and paper.

Contributors:

Danny Kaufman, Bryan Russell, Adobe Research

Senthil Purushwalkam, Abhinav Gupta, Carnegie Mellon University (CMU)