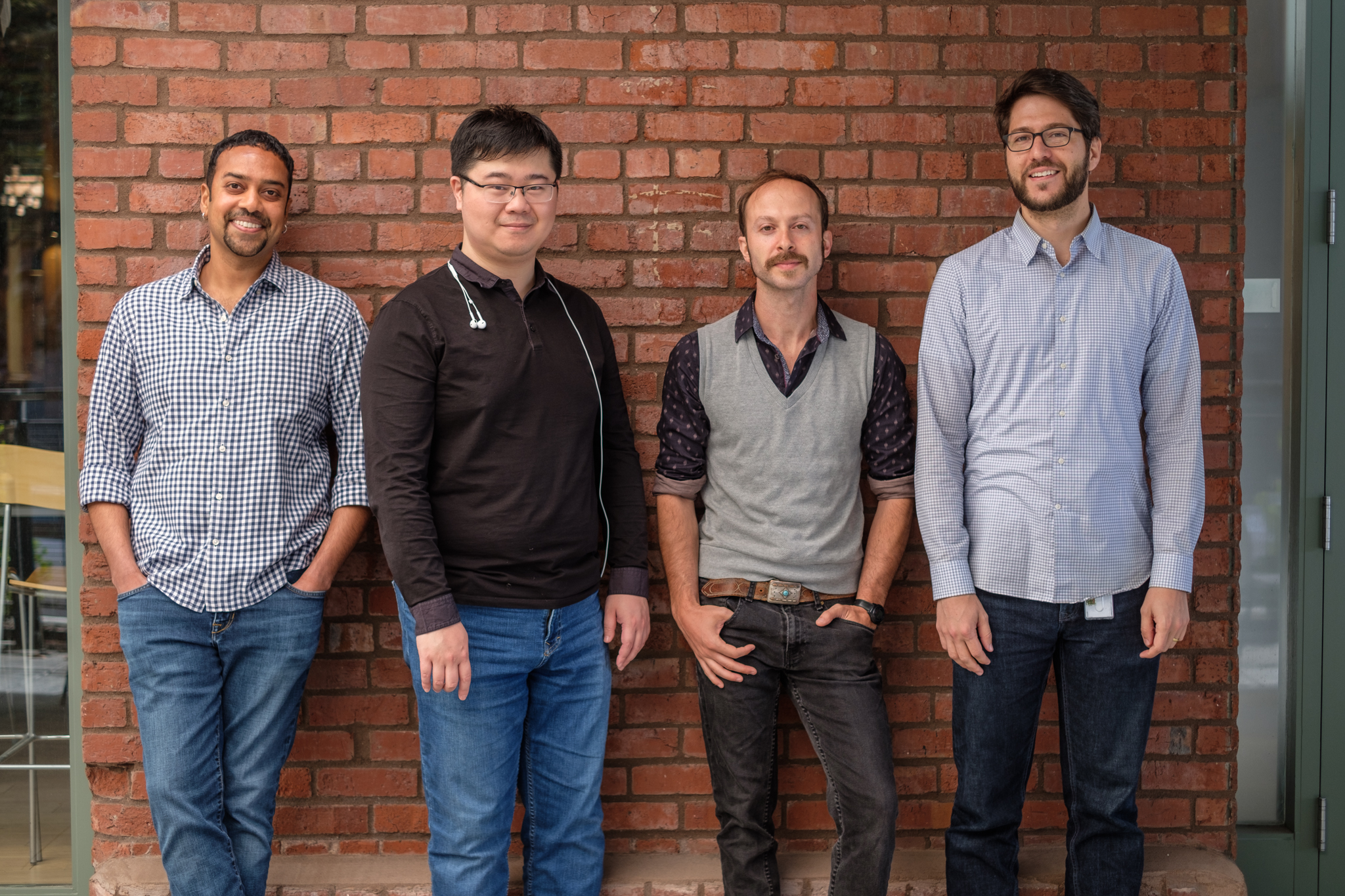

Adobe’s creative tools go well beyond pixel-based media, and audio is becoming more important at Adobe than ever before. Audio research has been conducted at Adobe Research for several years, but the last year has seen significant growth in the area through the formation of the Audio Research Group, which consists of Zeyu Jin, Nick Bryan, and Justin Salamon and is led by Gautham Mysore.

Innovative audio research in the works could enhance audio production, storytelling, music generation, augmented reality, animation, and more. “The group is focused on solving real-world audio problems with a strong scientific component, often involving deep learning and other state-of-the-art approaches,” says Mysore.

“We are re-imagining audio technology to empower people to tell their story—without the tools getting in the way,” he explains. The group is working to dramatically simplify the creation of audio content, while still maintaining high production value. To do this, they are developing intelligent algorithms to go “under the hood.”

“This research focus could democratize audio content creation,” Mysore says. For example, new tools could enable significantly more people to create spoken word content, such as podcasts, without any knowledge of audio editing and production. Technologies could also allow creators to lay down high-quality musical tracks without knowing how to play a traditional instrument or having the production skills of a sound engineer.

One of the keys to advancing work in audio today is a focus on artificial intelligence, broadly defined. New innovations will enhance audio workflows, but humans will still make the creative decisions, researchers say. “What I love about our work is that we develop intelligent tools to empower creative people, not replace them,” says Salamon.

“We’re a group of musicians with a passion for sound, and we practice headphones-on machine learning and signal processing,” Mysore explains. “It is often as much of an art as it is a science and we carefully frame research problems by treating audio as sound, not just raw data.”

Audio work extends well beyond manipulating sound in isolation. The group is also tackling problems at the intersection of audio and video. Examples include automatically suggesting audio content to match video, simplifying the creation of soundtracks, and improving video search by using audio and video jointly. Some of this work is in collaboration with video researchers. This thread of research also extends to creating new augmented reality experiences.

Some of the problems the group is tackling relate to traditional audio research areas such as speech enhancement, music information retrieval, speech and music synthesis, spatial audio, and audio event detection, but often with a twist due to the nature of the applications being addressed. Adobe has a growing emphasis on the human side of using technology, and thinking through the human-computer interaction (HCI) aspects of audio problems often leads to new breakthrough experiences and applications, which in turn helps the group define core algorithmic problems.

Adobe’s path-breaking audio work has already gained recognition from the audio research community. Audio research papers from Adobe Research, often with academic collaborators, have earned best paper awards at conferences including Interspeech, ICASSP, ISMIR, and LVA/ICA, as well as an AES student design competition gold award.

“We have one foot in industry and one foot in academia, and collaborate extensively with university faculty and PhD students,” says Mysore, highlighting the unique role that research scientists play at Adobe. They have the intellectual freedom to choose their own projects, which is key to curiosity-driven research.

The summer internship program is one of the highlights of the group, and Adobe Research in general. “We are fortunate to have some of the world’s top PhD students in the field come and work with us. Our interns are exposed to real-world problems in context,” Mysore explains.

The group recently welcomed the largest-ever cohort of Adobe Research audio interns: ten, primarily PhD students. Most intern projects lead to top-tier academic publications, and often to patent applications. Several intern-launched projects have become features in products as well, potentially reaching millions of customers. Interns also sometimes have the opportunity to present highly visible demos at the Adobe MAX Conference main-stage “Sneaks” alongside celebrity hosts, as Zeyu Jin and Nick Bryan did during their own internships.

The group often collaborates with Juan-Pablo Caceres, a research engineer who works on transforming audio research into product-ready software; Paris Smaragdis, a professor at the University of Illinois Urbana-Champaign (and part-time at Adobe) who works on a broad range of audio research problems; Tim Langlois and Dingzeyu Li, who bring their computational modeling skills to problems in augmented reality, 360 video, and immersive audio; and Tim Ganter and Celso Gomes, who work on rapid prototyping and design, particularly in the audio space.

As researchers figure out how to blend uniquely human creativity with advances in machine intelligence, new tools could serve as critical partners in an enhanced creative process. This will be more important than ever before for audio content creation. The Audio Research Group at Adobe and its collaborators will drive that technology forward to impact a wide range of creators.