Few-Shot Sound Event Detection

IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)

Publication date: May 4, 2020

Yu Wang, Justin Salamon, Nicholas J. Bryan, Juan Pablo Bello

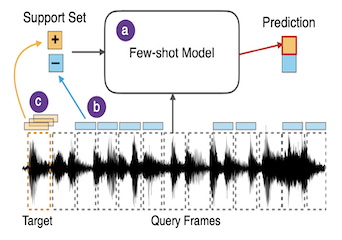

Locating perceptually similar sound events within a continuous recording is a common task in many audio applications. However, current tools require users to manually listen to and label all the locations of the sound events of interest, which is tedious and time-consuming. In this work, we (1) adapt state-of-the-art metric-based few-shot learning methods to automate the detection of similar-sounding events, requiring only one or a few examples of the target event, (2) develop a method to automatically construct a partial set of labeled examples (negative samples) to reduce user labeling effort, and (3) develop an inference-time data augmentation method to increase detection accuracy. To validate our approach, we perform an extensive comparative analysis of few-shot learning methods for the task of keyword detection in speech. We show that our approach successfully adapts closed-set few-shot learning approaches to an open-set sound event detection problem.
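To make the idea of metric-based few-shot detection concrete, here is a minimal sketch of one common variant: prototype-based classification, applied per window of a recording. It is an illustration under stated assumptions, not the authors' implementation. The abstract does not say which metric-based methods, embedding model, or threshold are used, so the function names (`mean_vector`, `positive_probability`, `detect_events`), the squared Euclidean distance, and the 0.5 threshold are all hypothetical. Window embeddings are assumed to be precomputed by some audio encoder, and the negative examples are simply passed in rather than constructed automatically.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def positive_probability(query, pos_proto, neg_proto):
    """Two-class softmax over negative squared distances to each prototype.

    Equals exp(-d_pos) / (exp(-d_pos) + exp(-d_neg)), written as a
    numerically stable sigmoid of (d_neg - d_pos).
    """
    z = squared_distance(query, neg_proto) - squared_distance(query, pos_proto)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def detect_events(window_embeddings, pos_support, neg_support, threshold=0.5):
    """Return indices of windows classified as the target event.

    pos_support: embeddings of the few user-labeled target examples.
    neg_support: embeddings of negative (non-target) examples.
    threshold:   hypothetical decision threshold, not taken from the paper.
    """
    pos_proto = mean_vector(pos_support)
    neg_proto = mean_vector(neg_support)
    return [
        i
        for i, query in enumerate(window_embeddings)
        if positive_probability(query, pos_proto, neg_proto) > threshold
    ]


# Toy 2-D embeddings: two positive shots, two negative examples, four windows.
pos_support = [[1.0, 0.0], [0.9, 0.1]]
neg_support = [[0.0, 1.0], [0.1, 0.9]]
windows = [[0.0, 1.0], [1.0, 0.0], [0.2, 0.8], [0.95, 0.05]]
detected = detect_events(windows, pos_support, neg_support)
print(detected)
```

Modeling the background with an explicit negative prototype is one simple way to turn a closed-set few-shot classifier into a detector that can reject windows unlike the target; the actual open-set strategy used in the work may differ.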
