RRM: Relightable assets using Radiance guided Material extraction

CGI 2024

Publication date: July 5, 2024

Diego Gomez, Julien Philip, Adrien Kaiser, Élie Michel

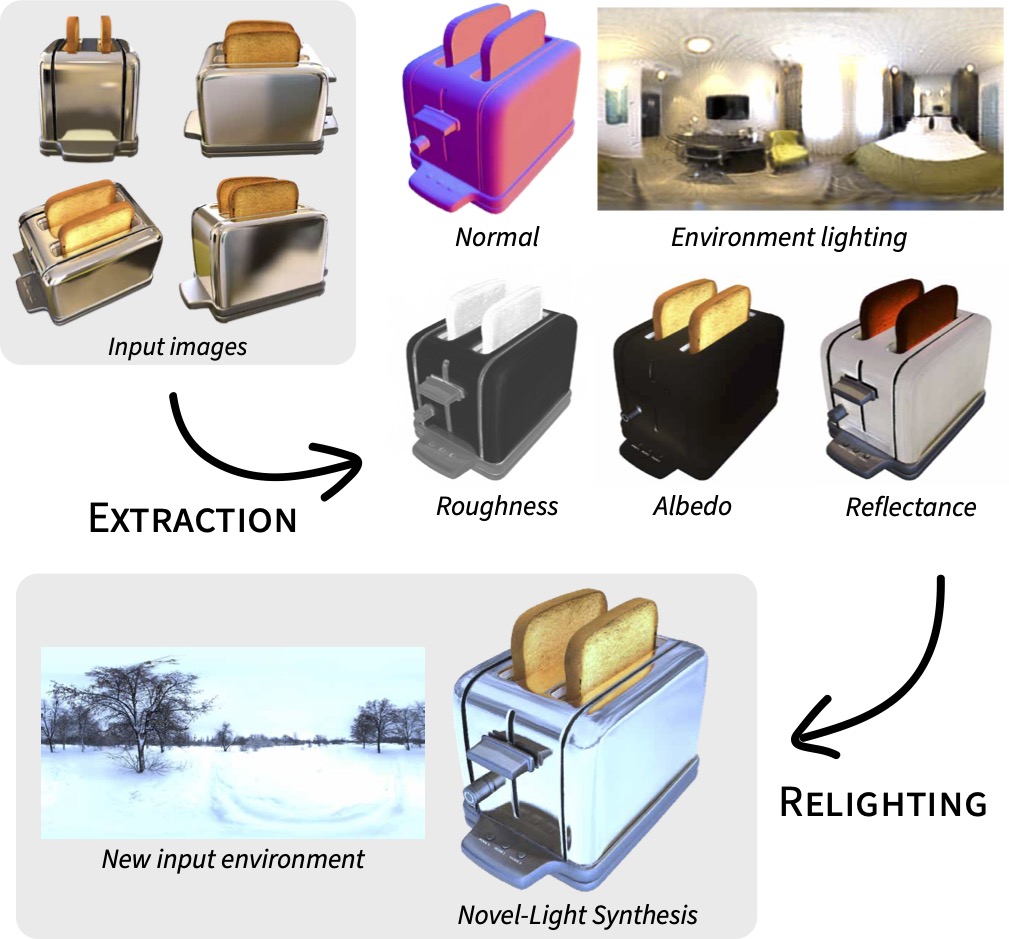

Novel view synthesis has seen tremendous improvements over the last few years thanks to the rise of neural representations of light fields, shifting the paradigm for the acquisition of 3D data from photographs. However, these radiance-based approaches typically cannot synthesize views under new lighting conditions. Recent efforts tackle the problem by extracting physically-based parameters that can then be rendered under arbitrary lighting, but they are limited in the range of scenes they can handle and usually mishandle glossy scenes. We propose RRM, a method that can extract the materials, geometry, and environment lighting of a scene even in the presence of highly reflective objects. We design a physically-aware radiance field representation to supervise the diffuse and view-dependent components of a physically-based module. This module uses Multiple Importance Sampling to capture the complex behavior of glossy indirect lighting as well as to feed our expressive environment light structure based on Laplacian pyramids. We demonstrate that our contributions outperform the state of the art on parameter retrieval tasks, leading to high-fidelity relighting and novel view synthesis on surfacic scenes.
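For readers unfamiliar with Multiple Importance Sampling, the sketch below illustrates the general idea on a one-dimensional toy integral. It is not RRM's implementation: the integrand, the two sampling densities, and the names `balance_weight` and `mis_estimate` are assumptions chosen for illustration, and the balance heuristic is used as one common weighting choice. In a renderer, the two strategies would typically be sampling the material lobe and sampling the light.

```python
import math
import random


def balance_weight(pdfs, counts, i):
    """Balance-heuristic weight of strategy i, given every strategy's pdf at one sample."""
    total = sum(n * p for n, p in zip(counts, pdfs))
    return counts[i] * pdfs[i] / total


def mis_estimate(f, n=20000, seed=0):
    """Estimate the integral of f over [0, 1] by combining two sampling strategies."""
    rng = random.Random(seed)
    # Strategy 0: uniform density p(x) = 1.
    # Strategy 1: linear density p(x) = 2x, sampled by inverting its CDF x^2.
    pdfs = [lambda x: 1.0, lambda x: 2.0 * x]
    samplers = [lambda: rng.random(), lambda: math.sqrt(rng.random())]
    counts = [n, n]

    estimate = 0.0
    for i, draw in enumerate(samplers):
        for _ in range(n):
            x = draw()
            p = [pdf(x) for pdf in pdfs]
            if p[i] == 0.0:
                continue  # a sample with zero density contributes nothing
            w = balance_weight(p, counts, i)
            estimate += w * f(x) / (p[i] * counts[i])
    return estimate


if __name__ == "__main__":
    # The exact value of the integral of x^2 over [0, 1] is 1/3.
    print(mis_estimate(lambda x: x * x))
```

The weights of all strategies sum to one at every sample, so the combined estimator stays unbiased while each strategy contributes most where its density matches the integrand best.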
